Understanding the Power of Normal Equation and Batch Gradient Descent Methods 📈

Dear Student,

Here is the supplementary documentation for the upcoming lab session. This session covers two essential machine learning techniques in detail: the normal equation and batch gradient descent. Both are core tools for predictive modeling and data analysis, and by the end of the session you should have a deeper understanding of how they work and where they apply.

Agenda for this session:

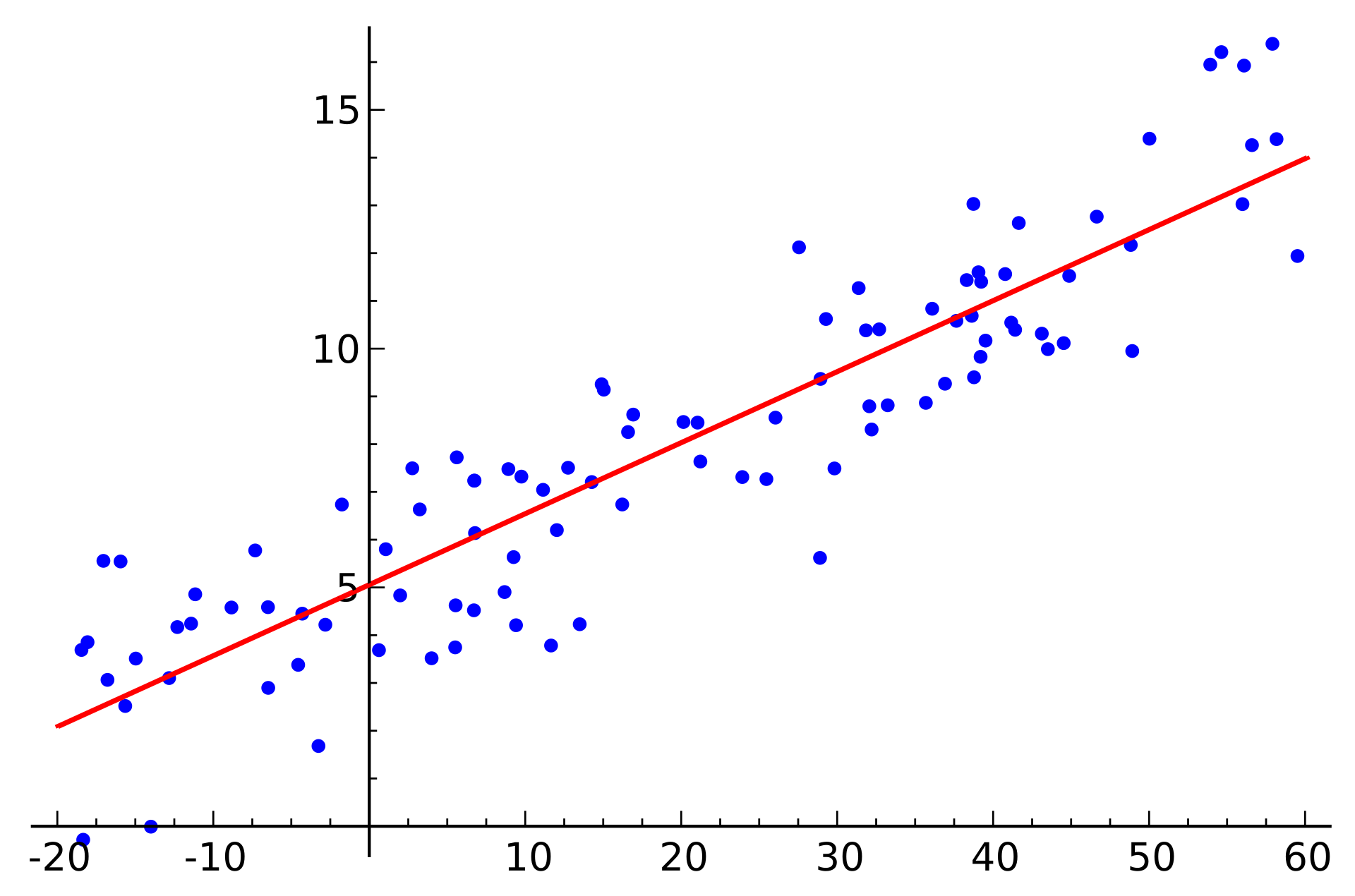

1. Understanding the Normal Equation 📝: The Normal Equation provides a closed-form solution to linear regression problems, allowing us to find the optimal parameters directly. In this session, we'll explore the mathematical formulation of the Normal Equation and its advantages in solving linear regression tasks. We'll demonstrate how to apply the Normal Equation in matrix form and discuss how its cost scales as the number of features grows.

2. Introducing the Polynomial Features Model 💻: The Polynomial Features Model is a versatile technique for capturing non-linear relationships in data by introducing polynomial terms into linear models. In this session, we'll also explore how to integrate polynomial features into linear regression models and demonstrate their effectiveness in modeling complex data patterns. You'll learn to select appropriate polynomial degrees and interpret the results of polynomial regression analyses.

3. Unraveling Batch Gradient Descent 📊: Batch Gradient Descent is an optimization algorithm commonly used in machine learning to minimize a loss or cost function and find the optimal parameters of a model iteratively. In this segment, we'll dive deep into the mechanics of Batch Gradient Descent, discussing how it works, its convergence properties, and practical considerations for implementation. Hands-on exercises will give you practical experience in implementing Batch Gradient Descent from scratch and tuning its hyperparameters.

4. Practical Applications and Case Studies 🔬: To reinforce your understanding of these techniques, we'll explore real-world applications and case studies where the Normal Equation, Batch Gradient Descent, and Polynomial Features Model have been successfully employed. Using a carefully selected dataset, you'll see how these methods can be applied to solve problems across various domains.
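As a preview of the agenda items above, here is a minimal sketch in Python with NumPy. It is not the script developed in the video: the synthetic data, the function names (`polynomial_features`, `normal_equation`, `batch_gradient_descent`), the mean-squared-error cost, and the learning rate and iteration count are all assumptions chosen for illustration. It fits the same degree-2 polynomial model twice, once with the normal equation and once with batch gradient descent, so you can compare the two parameter vectors.

```python
import numpy as np

# Synthetic data (an assumption for illustration): y = 1 + 2x + 0.5x^2 + noise
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=100)
y = 1.0 + 2.0 * x + 0.5 * x**2 + rng.normal(0.0, 0.05, size=100)


def polynomial_features(x, degree):
    """Build the design matrix with columns [1, x, x^2, ..., x^degree]."""
    return np.vander(x, degree + 1, increasing=True)


def normal_equation(X, y):
    """Solve (X^T X) theta = X^T y; avoids forming an explicit inverse."""
    return np.linalg.solve(X.T @ X, X.T @ y)


def batch_gradient_descent(X, y, lr=0.1, n_iters=5000):
    """Minimize the mean squared error using the full dataset at every step."""
    m, n = X.shape
    theta = np.zeros(n)
    for _ in range(n_iters):
        # Gradient of (1/m) * ||X theta - y||^2 with respect to theta
        grad = (2.0 / m) * X.T @ (X @ theta - y)
        theta -= lr * grad
    return theta


X = polynomial_features(x, degree=2)
theta_ne = normal_equation(X, y)
theta_bgd = batch_gradient_descent(X, y)

print("Normal equation:       ", theta_ne)
print("Batch gradient descent:", theta_bgd)
```

On this small, well-scaled problem both approaches should land on nearly the same parameters. The learning rate and iteration count here suit inputs in the range [-1, 1]; with unscaled features or higher polynomial degrees, gradient descent usually needs feature scaling or a smaller learning rate.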

How to Access the Video

- Click on the following link to watch the lab session recording on our 📹 YouTube channel: ➡️ Normal Equation and Batch Gradient Descent Methods.

- The complete scripts developed during the video are available in the Gitea repository for the NE & BGD methods 🗄️.

Join Us:

- Subscribe to Our Channel for more tutorials and updates 🤟.

- Visit my website at https://gmarx.itmorelia.com to stay connected with our community 🌐.

- If you have questions, use our dedicated service at https://answer.itmorelia.com.

Happy coding,

Gerardo Marx

Lecturer